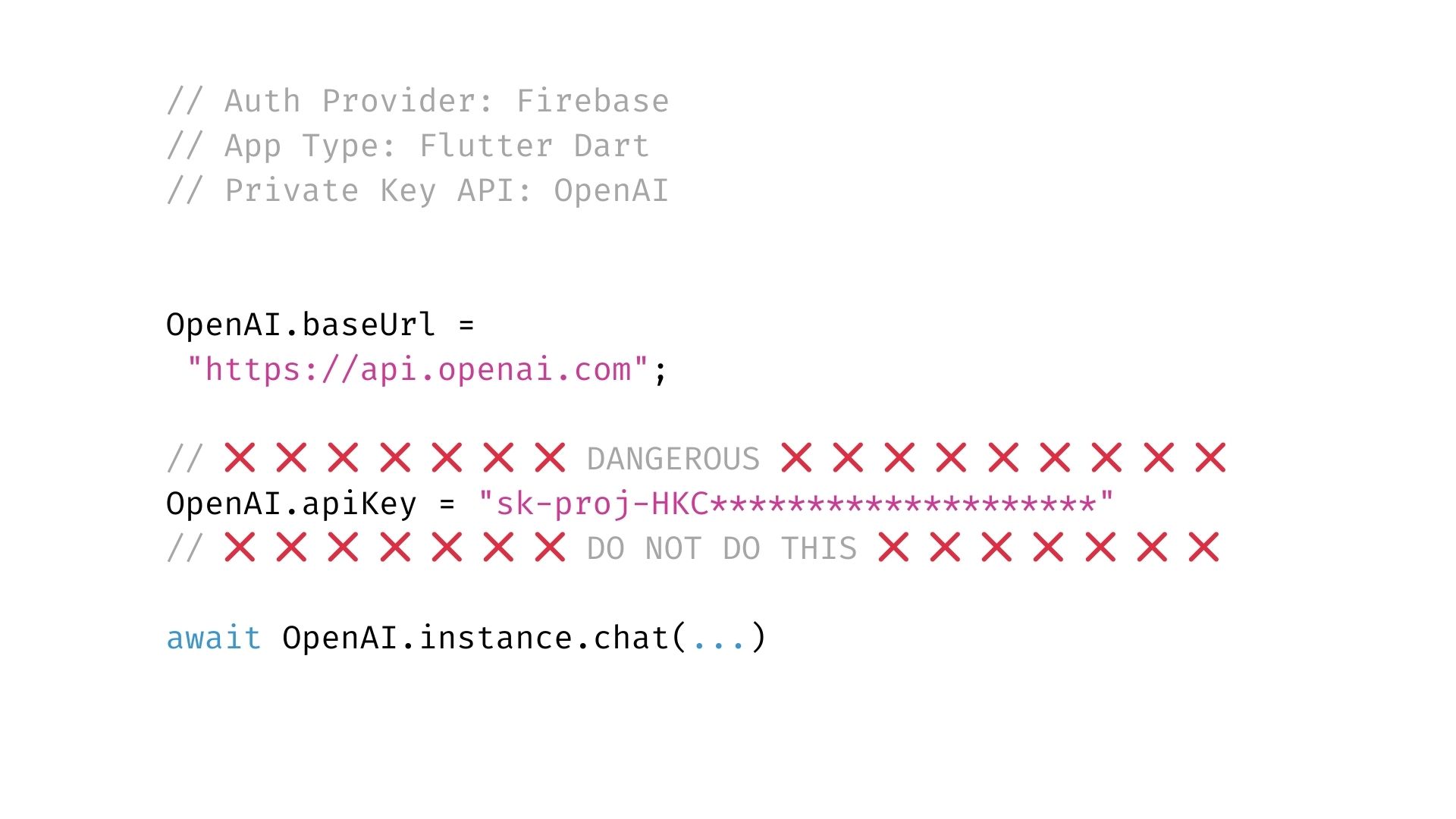

Don't ship LLM API keys in your app

Keep LLM secret keys out of your app and avoid leaks that can lead to thousands of dollars in LLM API costs 💸🚨

Use an open-source, battle-tested backend to protect your LLM API key

🛡️

JWT Authentication

Requests are verified with JWTs from the app's authentication provider so only your users have access to the LLM API via Backmesh.
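
As a rough illustration, here is a minimal sketch of how an app might build a request that carries the signed-in user's JWT instead of the LLM API key. The proxy URL, the `buildProxiedRequest` helper, and the `ProxiedRequest` shape are assumptions made for this example, not Backmesh's documented API; the request body follows the OpenAI chat completions format with an example model name.

```typescript
// Hypothetical proxy endpoint; replace it with the URL of your own gatekeeper.
const PROXY_URL = "https://proxy.example.com/v1/chat/completions";

interface ProxiedRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds a request authenticated by the user's JWT.
// No LLM API key appears anywhere in the app code.
function buildProxiedRequest(userJwt: string, prompt: string): ProxiedRequest {
  // A JWT has three dot-separated segments: header.payload.signature.
  // This is only a shape check; the backend is what verifies the signature.
  if (userJwt.split(".").length !== 3) {
    throw new Error("expected a JWT with three dot-separated segments");
  }
  return {
    url: PROXY_URL,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${userJwt}`,
    },
    body: JSON.stringify({
      model: "gpt-4o-mini", // example model name
      messages: [{ role: "user", content: prompt }],
    }),
  };
}
```

The returned object can be handed to `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })`. The token would come from whatever authentication provider the app already uses.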

🚧

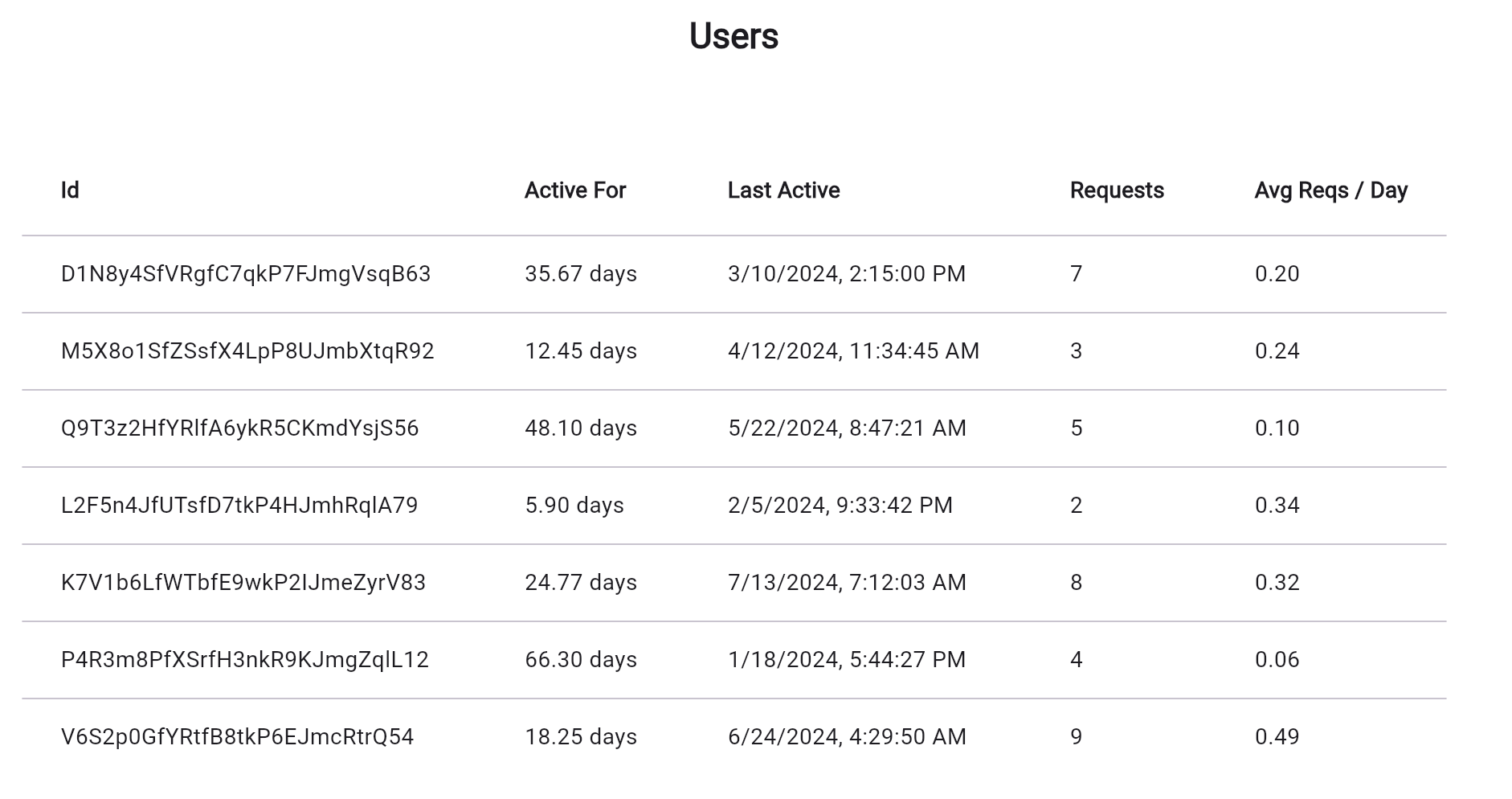

Rate limits per user

Configurable per-user rate limits to prevent abuse (e.g. no more than 5 OpenAI API calls per user per hour).
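
To make the idea concrete, here is a minimal in-memory sketch of a per-user limit such as "5 calls per user per hour". It uses a sliding window and is not how Backmesh implements its limits; the `PerUserRateLimiter` class and its method names are hypothetical.

```typescript
// Sliding-window limiter: at most `limit` calls per user within `windowMs`.
class PerUserRateLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  // Timestamps (ms) of the recent accepted calls, keyed by user ID.
  private readonly calls = new Map<string, number[]>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true and records the call if the user is under the limit at `now`.
  allow(userId: string, now: number = Date.now()): boolean {
    const cutoff = now - this.windowMs;
    // Drop timestamps that have fallen out of the window.
    const recent = (this.calls.get(userId) ?? []).filter((t) => t > cutoff);
    const allowed = recent.length < this.limit;
    if (allowed) {
      recent.push(now);
    }
    this.calls.set(userId, recent);
    return allowed;
  }
}

// Example: no more than 5 calls per user per hour.
const hourlyLimiter = new PerUserRateLimiter(5, 60 * 60 * 1000);
```

A gatekeeper would call `hourlyLimiter.allow(userId)` with the user ID taken from the verified JWT, and reject the request (typically with HTTP 429) when it returns `false`. A production setup would keep the counters in shared storage rather than process memory.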

What is Backmesh?

Backmesh is an open-source, thoroughly tested backend that uses military-grade encryption to protect your LLM API key and offers an API Gatekeeper that lets your app call the LLM API safely.

LLM User Analytics without packages

All LLM API calls are instrumented so you can identify usage patterns, reduce costs and improve user satisfaction within your AI applications.